X and Discord are two platforms that take a stand against users spreading misinformation on their sites and applications. X, formerly known as Twitter, allows users to post media within its guidelines, while Discord works as a messaging application with similar rules of its own.

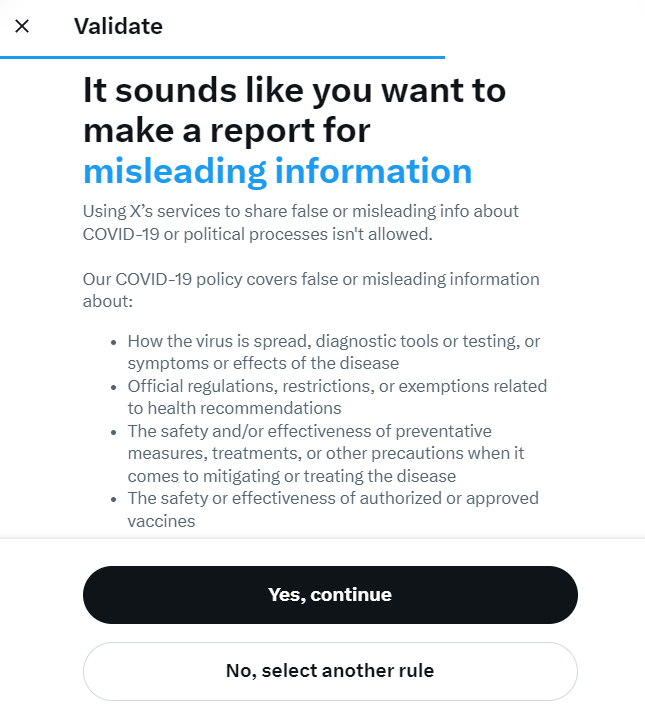

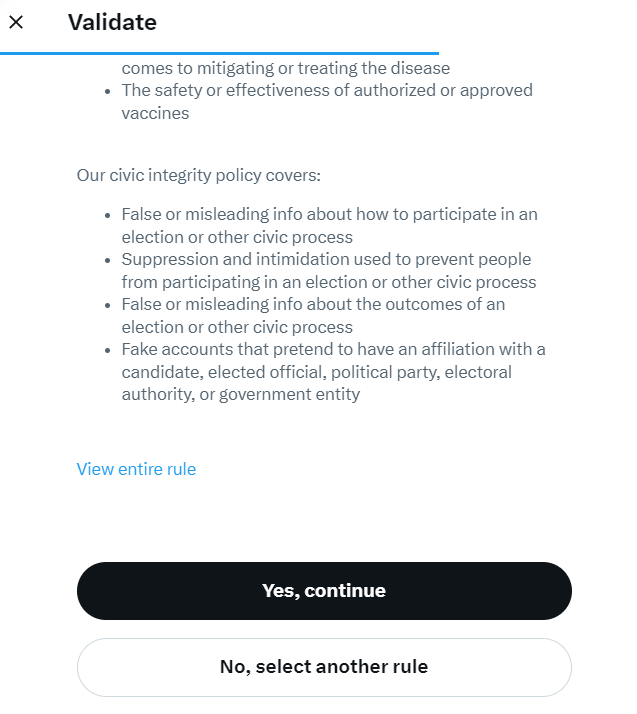

X works to curb misinformation under its crisis misinformation policy, dated August 2022, which states: “We will take action on accounts that use X’s services to share false or misleading information that could bring harm to crisis-affected populations.” In dire situations, it is especially important that incorrect information is stopped before it spreads any further. For example, it would be against X’s policy for a user to claim, even in jest, that vaccines will plague you with bad luck and leave your bloodline cursed for generations.

Users on the site are allowed a total of two strikes when it comes to misinformation. At this point in the process, it might be worth it for X to have the accounts manually checked to ensure they aren’t bot accounts. The account might just belong to someone who is mistaken or confused.

In April 2023, X prohibited synthetic and manipulated media, adding it to the guidelines on what users may not post or share. Accounts that do so will have their posts labeled and may be locked to stop them from causing further confusion.

Alex Anderson, who has a background in Twitter’s Fraud and Risk Operations and is currently Senior Platform Policy Specialist and Expert of Platform Integrity, published a post on Discord’s blog on February 25th, 2022.

The purpose of this blog post was to notify users of an update to Discord’s Community Guidelines. The post outlines an addition to the terms stating, “...users may not share false or misleading information on Discord that is likely to cause physical or societal harm.” It is evident that Discord is concerned about the wellbeing of its users.

The actual phrase in the guidelines reads, “Content that is false, misleading, and can lead to significant risk of physical or societal harm may not be shared on Discord.” Incorrect statements about public health, disease, and vaccines are not allowed. In short, users are prohibited from spreading health misinformation.

If any misinformation is spotted on the application, the article provides directions for reporting it.

Digital misinformation is harmful because it hinders people’s ability to come to well-reasoned conclusions — and it does so at a rate faster than what is possible with in-person dialogue.

Alex Anderson

If Discord wanted to go a step further in preventing the spread of misinformation, it could implement a blacklist of fake news websites, as recommended by Lyel in the feedback section. I found that post while searching to see whether this feature already existed. It appears the moderation team is not interested in the idea, though I can see how it would be lower on their list of priorities.
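To make the blacklist idea concrete, here is a minimal sketch of how a message could be checked against a list of blocked domains. This is only my own illustration, not how Discord handles links: the domain names are placeholders, and the function names `is_blocked` and `blocked_links` are made up for this example.

```python
import re
from urllib.parse import urlparse

# Hypothetical blocklist. These are placeholder domains, not real entries.
BLOCKED_DOMAINS = {"fake-news.example", "hoax-daily.example"}

# Rough pattern for finding http(s) links inside a message.
URL_PATTERN = re.compile(r"https?://\S+")


def is_blocked(url: str) -> bool:
    """Return True if the URL's host is a blocked domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS)


def blocked_links(message: str) -> list[str]:
    """Return every link in the message that points to a blocked domain."""
    return [url for url in URL_PATTERN.findall(message) if is_blocked(url)]


if __name__ == "__main__":
    text = "Read https://www.fake-news.example/story and https://example.org/ok"
    print(blocked_links(text))
```

A real system would also need someone to decide which sites belong on the list and to keep it current, which is likely the harder part.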

On October 28th, 2022, a Transparency Report covering April to June of the same year was published on the aforementioned blog. The report relayed the termination of 270 accounts and 73 servers on account of misinformation. While it is wonderful that over 70 large groups spreading incorrect information have been shut down, leading to fewer contradictions, these numbers seem concerningly low, as there are 19 million servers on Discord.
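To put those numbers in perspective, here is a quick back-of-the-envelope calculation using only the two figures above. It assumes the 19 million figure is a fair total to compare against a single quarter of removals, which is a simplification on my part.

```python
servers_removed = 73          # servers terminated for misinformation, April to June 2022
total_servers = 19_000_000    # total servers cited above

share = servers_removed / total_servers

print(f"{share:.6%}")                          # 0.000384%
print(round(total_servers / servers_removed))  # 260274
```

In other words, roughly one server in every 260,000 was removed for misinformation during that quarter.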

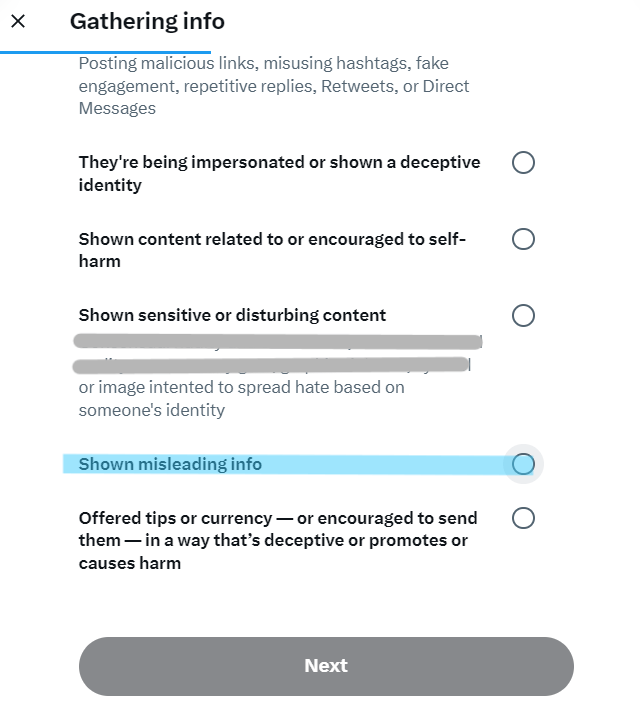

While both applications are working towards preventing the spread of misinformation, more accessible and readily available tools for reporting it should exist. Sending users through multiple steps, in different directions and in circles, isn’t exactly helping anyone.

For example, I started a report on the first post I saw on Twitter to view the steps, without submitting anything. The process made it clear that reporting misleading information only mattered if it was related to health or politics.

It’s terrific these companies are striving to eradicate untrue health and political information, but why stop there?

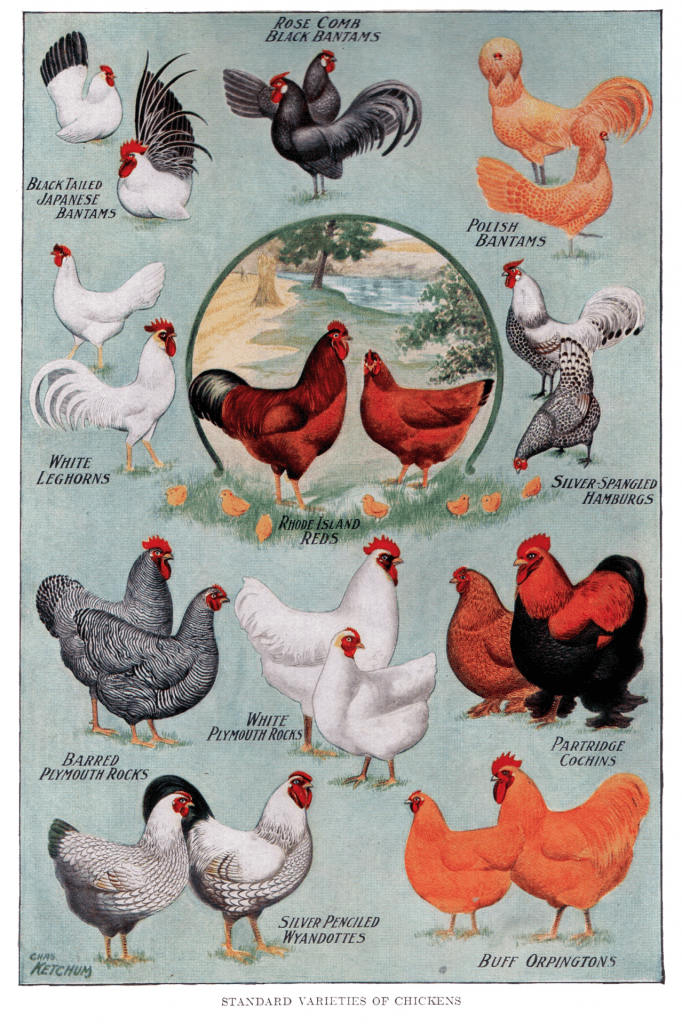

One afternoon about ten years ago, when sites were less moderated, I changed the information on several Wikipedia pages. Our class was instructed to research the various chicken breeds but was warned against using that site. So, instead of a Silkie’s egg color reading cream or tinted, I entered something along the lines of light blue with pink speckles. Today I cannot recall my motives; maybe it has served its purpose as this anecdote.

I thought the misinformation was too absurd to be believed at the time, yet some in my class wrote it down even though the site wasn’t to be used as a source. I recall the confusion in class. These days, though, sites like that are much more heavily moderated.

On X and Discord, however, there is not much to stop incidents like that, and some could be far more harmful.